Transformers and Hugging Face Pipelines: Python Tutorial

Why Beginners Use Transformers and Hugging Face Pipelines

Hugging Face saves time because you do not need to train anything. You load a model that has already been trained on millions of sentences. The pipeline function handles the steps in between: splitting your text into tokens, running the model, and turning the raw output into a readable result. You focus only on writing the input and reading the output. This makes the whole process simple for beginners and even for kids who want to learn how computers process text.

Setting Up Google Colab for Transformers and Hugging Face Pipelines

Google Colab already gives you Python, so you only need to install the transformers library with one command. After the installation finishes, you import the pipeline function and start using models. Each model is downloaded the first time you load it, so you need an internet connection. The small models used in this tutorial run on a normal CPU, so no extra setup is needed.

Install Command for Transformers and Hugging Face Pipelines

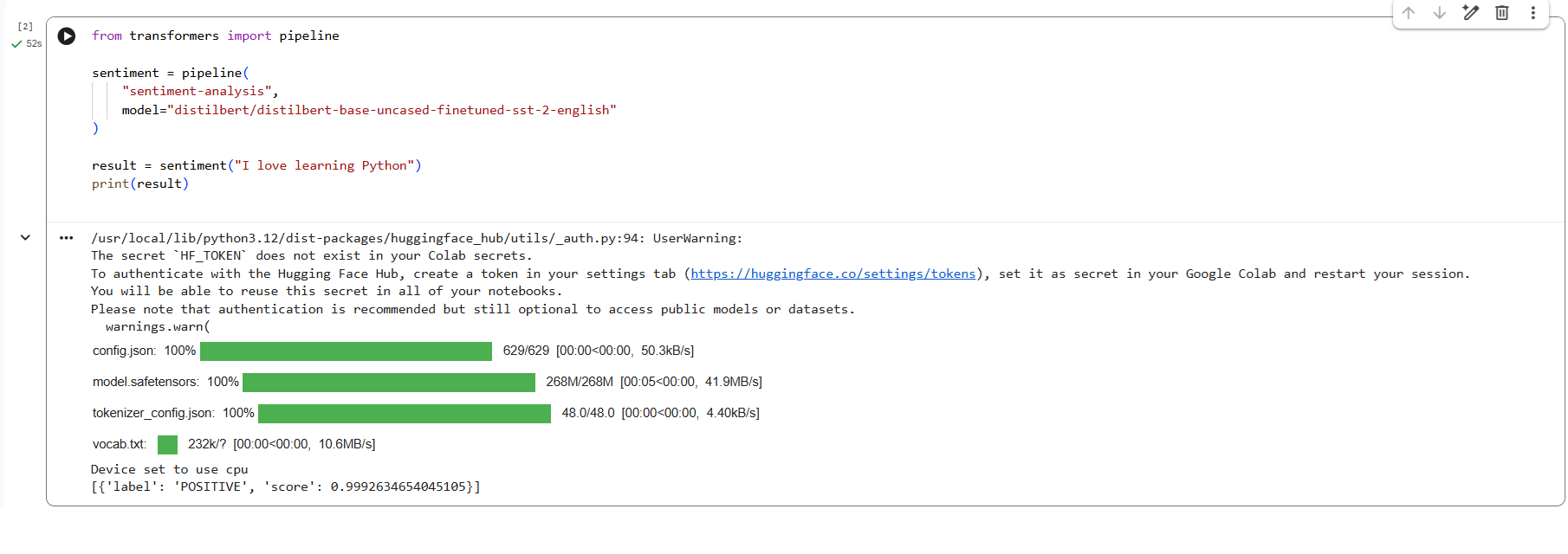
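
In a Colab cell the install is usually a single line, `!pip install transformers`. As a small optional check, the sketch below uses only the Python standard library to confirm that the package can be found before you import it. The helper name `is_installed` is made up for this example.

```python
import importlib.util


def is_installed(package_name):
    """Return True if Python can find the package in this environment."""
    return importlib.util.find_spec(package_name) is not None


# In Colab, the install command itself is typically: !pip install transformers
if is_installed("transformers"):
    print("transformers is ready to import")
else:
    print("transformers is missing; run: pip install transformers")
```

Pipelines also need a deep learning backend such as PyTorch, which Colab normally provides already.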

SENTIMENT ANALYSIS

Sentiment analysis checks the feeling inside a sentence. It tells you if the sentence sounds positive or negative. After installing the library, you load a sentiment model and run it. The model reads your text, understands the meaning, and gives a clear result with a confidence score.

Code:
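
The listing below is a minimal sketch of the kind of code this section describes, not necessarily the exact original. It assumes the library's default sentiment model is acceptable; the helper names `format_sentiment` and `run_sentiment` are made up for this example. The pipeline returns a list of dictionaries with a `label` and a `score`, and the helper turns that into readable text.

```python
def format_sentiment(results):
    """Turn pipeline output such as [{'label': 'POSITIVE', 'score': 0.95}]
    into short readable strings."""
    return [f"{item['label']} ({item['score']:.2f})" for item in results]


def run_sentiment(text):
    # The import line brings the pipeline function into your code.
    from transformers import pipeline

    # No model name is given, so the library chooses a default sentiment
    # model and downloads it on first use.
    sentiment = pipeline("sentiment-analysis")
    return sentiment(text)
```

In a Colab cell you could then run `print(format_sentiment(run_sentiment("I love learning Python!")))` and change the sentence to test other inputs.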

Explanation:

The import line brings the pipeline function into your code. The variable sentiment holds a ready-to-use model for detecting sentiment. It reads your text and returns a label such as POSITIVE or NEGATIVE, along with a confidence score. The print line shows you the result. Even a child can change the input sentence and test different emotions.

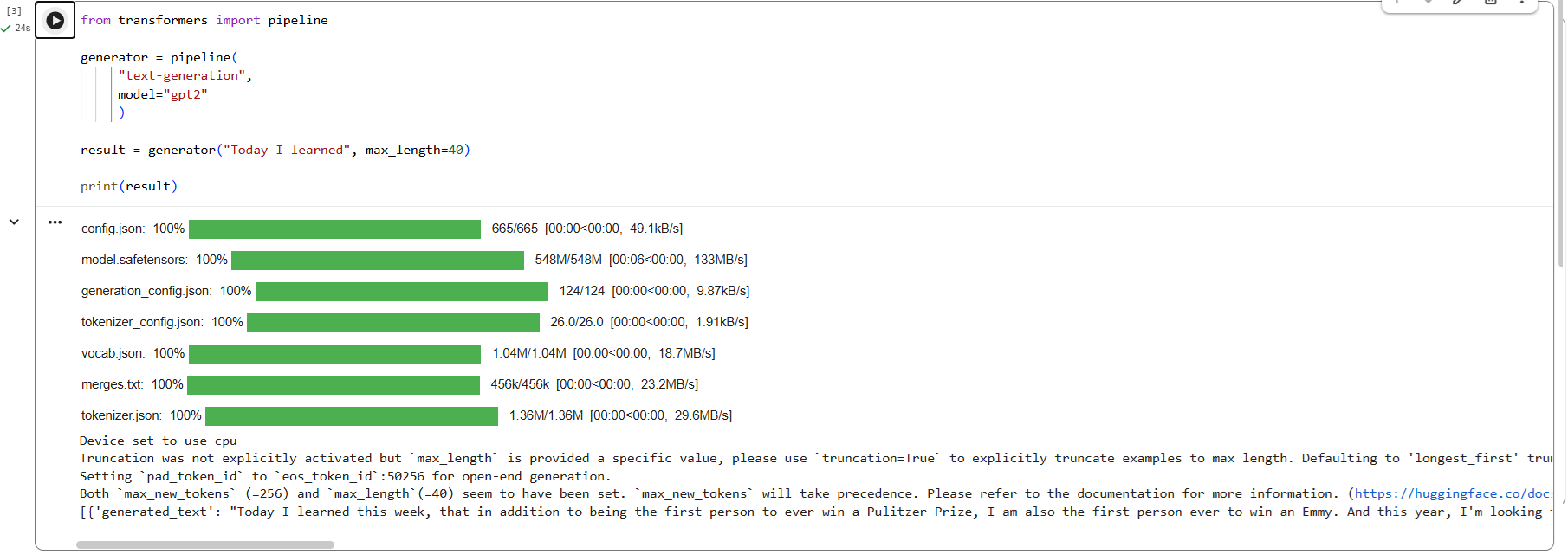

TEXT GENERATION

Text generation creates new text from a starting line. You write the first few words, and the model continues the sentence. It predicts the next words based on patterns it learned during training. This teaches beginners how computers write simple stories or answers.

Code:
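
The listing below is a minimal sketch of a text generation call, not necessarily the exact original. It assumes the library's default text generation model; the helper names and the default `max_length=30` are choices made for this example. The pipeline returns a list of dictionaries, each with a `generated_text` entry.

```python
def generated_texts(outputs):
    """Pull the text out of pipeline output such as
    [{'generated_text': 'Once upon a time ...'}]."""
    return [item["generated_text"] for item in outputs]


def run_generation(prompt, max_length=30):
    from transformers import pipeline

    # No model name is given, so the library chooses a default model.
    generator = pipeline("text-generation")

    # max_length is counted in tokens and includes the prompt itself.
    return generator(prompt, max_length=max_length, num_return_sequences=1)
```

In a Colab cell, `print(generated_texts(run_generation("Once upon a time")))` shows how the model continues the prompt. The output can differ from run to run.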

Explanation:

The pipeline loads a model trained to generate text. It reads the starting sentence and keeps writing until it reaches the length limit. The max_length value sets that limit in tokens (pieces of words), and the count includes your prompt. You can write simple prompts and watch how the model continues them.

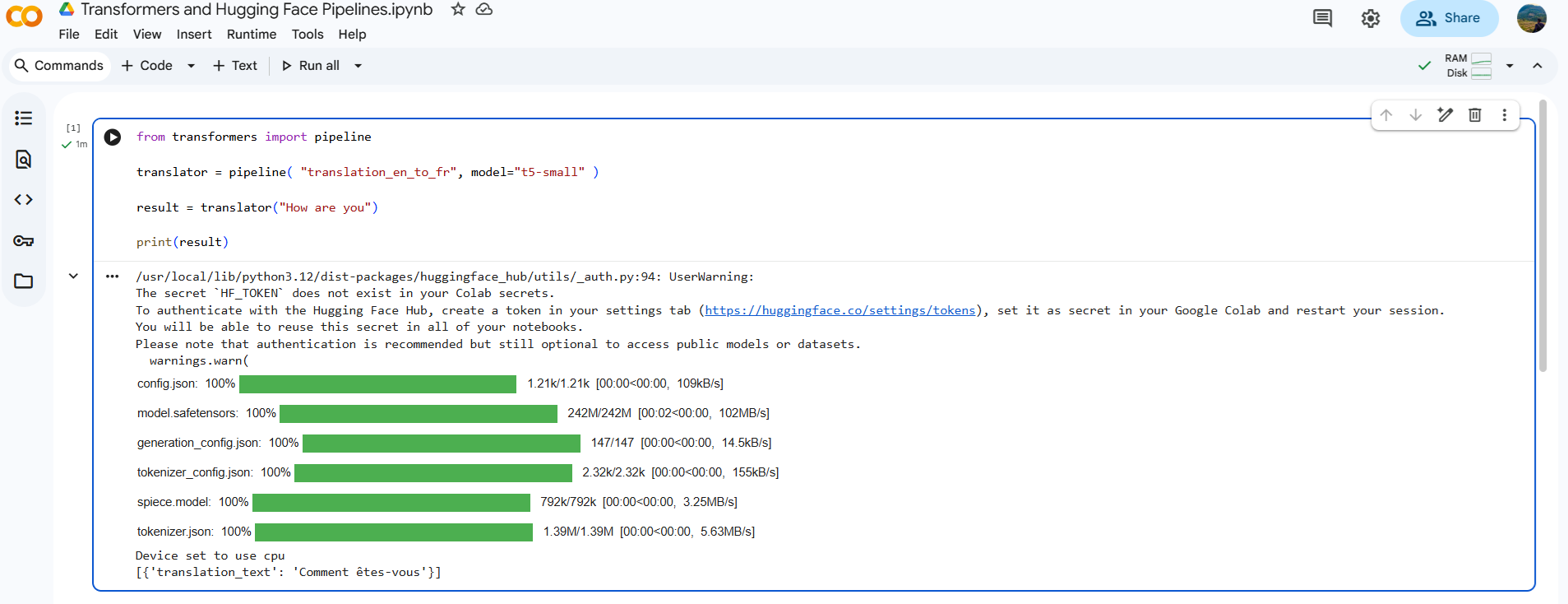

TRANSLATION

Translation changes text from one language to another. With pipelines, this becomes a small task. The model reads the English sentence and prints the French version. This example shows how easy it is to try language tasks in Colab.

Code:
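
The listing below is a minimal sketch of an English to French translation call, not necessarily the exact original. It assumes the installed transformers version supports the `translation_en_to_fr` task name and that its default model is acceptable; the helper names are made up for this example. The pipeline returns a list of dictionaries, each with a `translation_text` entry.

```python
def translated_texts(outputs):
    """Pull the text out of pipeline output such as
    [{'translation_text': 'Bonjour'}]."""
    return [item["translation_text"] for item in outputs]


def run_translation(english_text):
    from transformers import pipeline

    # The task name tells the pipeline which language pair to use.
    translator = pipeline("translation_en_to_fr")
    return translator(english_text)
```

In a Colab cell, `print(translated_texts(run_translation("I like to read books.")))` prints the French version.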

Explanation:

The pipeline loads the translation tool. The model reads the English words and converts them into French. The result appears as a short and clear sentence. You can type any small English line and see how it translates.

SUMMARIZATION

Summarization turns a long text into a short version while keeping the main ideas. It helps beginners see how computers make quick notes from big paragraphs.

Code:
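
The listing below is a minimal sketch of a summarization call that uses the three settings explained in this section, not necessarily the exact original. It assumes the installed transformers version supports the `summarization` task and that its default model is acceptable; the helper names are made up for this example. The pipeline returns a list of dictionaries, each with a `summary_text` entry.

```python
def summary_texts(outputs):
    """Pull the text out of pipeline output such as
    [{'summary_text': 'A short version.'}]."""
    return [item["summary_text"] for item in outputs]


def run_summary(long_text):
    from transformers import pipeline

    summarizer = pipeline("summarization")

    # max_length and min_length are counted in tokens, not words.
    # do_sample=False turns off random sampling, so results are repeatable.
    return summarizer(long_text, max_length=20, min_length=10, do_sample=False)
```

In a Colab cell, `print(summary_texts(run_summary(paragraph)))` prints the summary, where `paragraph` is any long string you define.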

Explanation:

The pipeline loads a model trained to write short summaries. It reads your paragraph, picks out the most important parts, and writes a brief version. max_length=20 caps the summary at 20 tokens. Tokens are pieces of words rather than whole words, so the summary can come out shorter than 20 words. min_length=10 makes sure the summary isn’t too short. do_sample=False turns off random sampling, so the same input gives the same summary every time.

If the summary still looks a little long, the code simply keeps the first sentence and ends it with a period.
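
The trimming step described above can be sketched in plain Python. This is one simple way to do it, with a made-up helper name `first_sentence`. It splits on the first period, so it will cut too early if the sentence contains a decimal number or an abbreviation.

```python
def first_sentence(summary):
    """Keep only the text before the first period and end it with a period."""
    first = summary.strip().split(".")[0].strip()
    return first + "."


print(first_sentence("Cats sleep a lot. They also play at night."))
```

The same helper also adds a period when the summary has none, so the output always ends cleanly.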

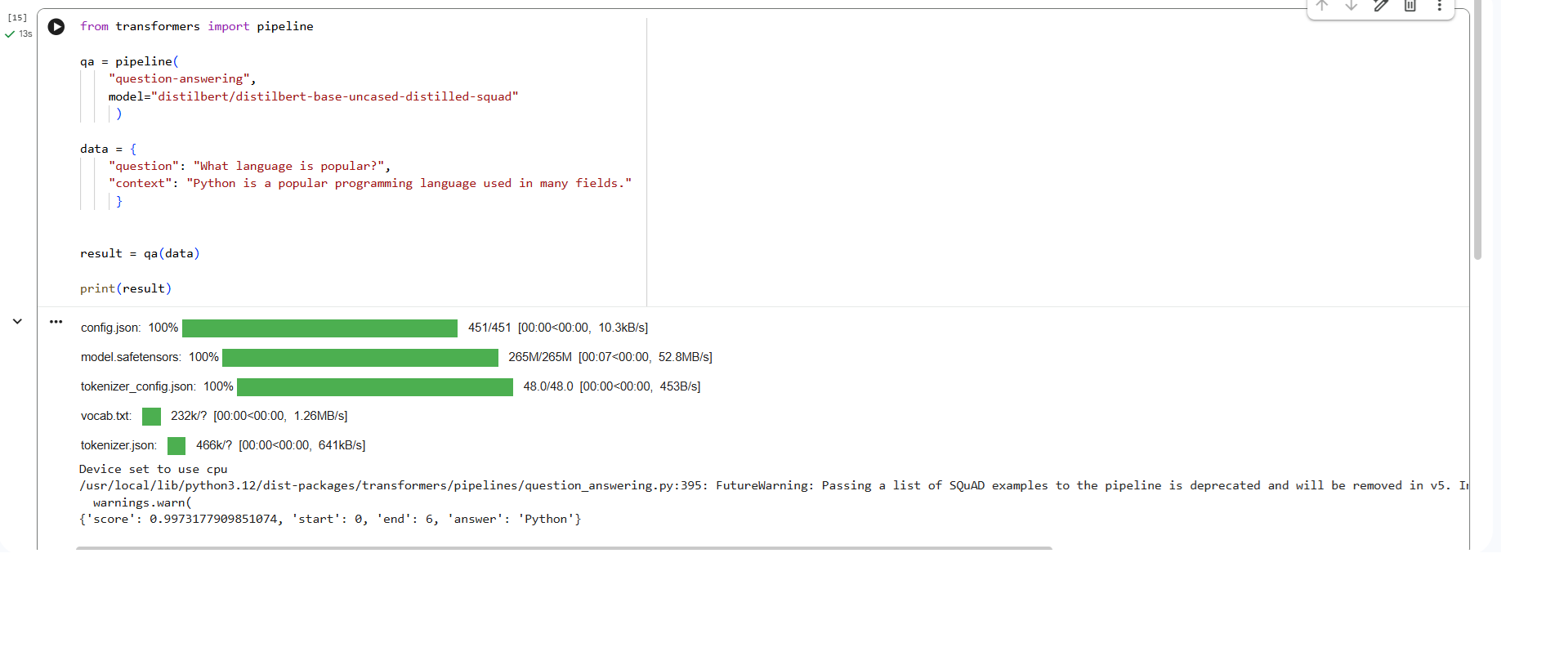

QUESTION ANSWERING

Question answering reads a passage and finds the answer inside it. You give a question and a context. The model searches the context and returns the span of text most likely to answer the question, along with a confidence score.

Code:
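
The listing below is a minimal sketch of a question answering call, not necessarily the exact original. It assumes the library's default question answering model; the helper names are made up for this example. The pipeline returns a dictionary with `answer`, `score`, `start`, and `end`, where `start` and `end` are character positions in the context. The helper uses those positions to show that the answer is taken from the passage itself.

```python
def answer_span(result, context):
    """Return the piece of the context that the result's positions point to."""
    return context[result["start"]:result["end"]]


def run_qa(question, context):
    from transformers import pipeline

    # No model name is given, so the library chooses a default model.
    qa = pipeline("question-answering")
    return qa(question=question, context=context)
```

In a Colab cell, `result = run_qa("What is the capital of France?", "Paris is the capital of France.")` followed by `print(result["answer"], result["score"])` shows the answer and how confident the model is.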

Explanation:

The model reads both the question and the passage. It searches inside the text and picks the phrase most likely to be the answer. The answer is copied from the provided context, so the model does not guess from outside knowledge. This helps beginners learn how computers handle questions.

Running This in Google Colab with Transformers and Hugging Face Pipelines

You open a new notebook. You install the transformers library. You paste each code block and run it. You change the input text to see how the model responds. You observe the output and learn how each task works. This hands-on approach helps even children understand the basics of natural language processing.

Summary of Transformers and Hugging Face Pipelines

Transformers help computers understand and work with text. Hugging Face pipelines make these models easy to use. You only write simple code, and the model handles the hard work. These tools help in many tasks like emotion checking, writing new text, translating languages, making summaries, and answering questions. With Google Colab, you run everything online without setup problems. This makes the learning process smooth and friendly, even for young beginners.

Conclusion

You just saw how a few lines of Python code can make a computer detect emotions in text, write stories, translate languages, summarize paragraphs, and answer questions about a passage. All of this is possible today with Hugging Face Transformers and very little setup.

This is the magic of modern NLP: powerful, free, and beginner-friendly.

